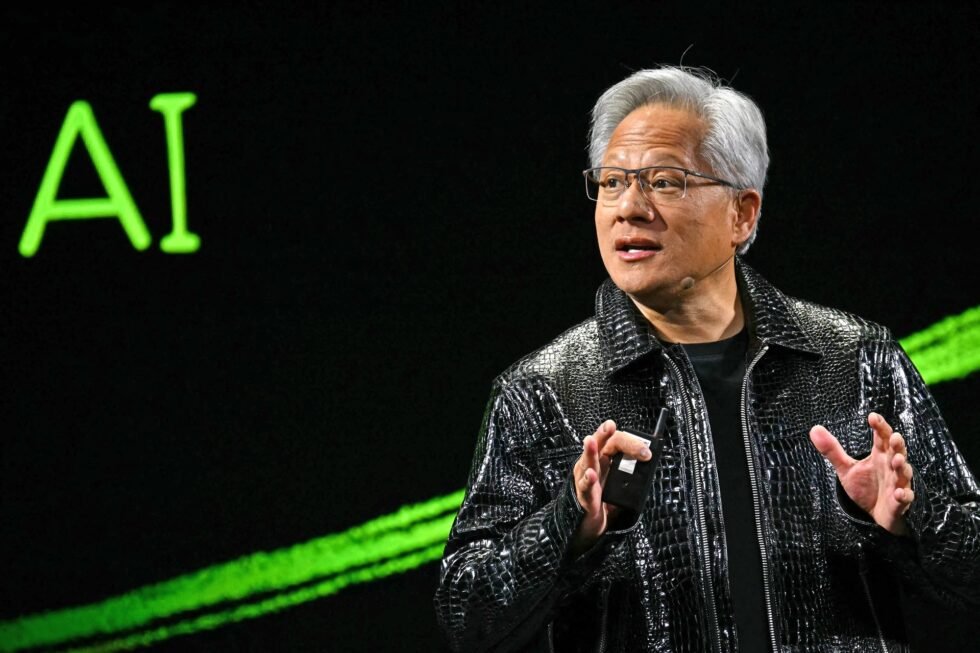

Nvidia’s decision to license AI inference technology from chip startup Groq signals an important shift in how the world’s leading AI chipmaker is approaching the next phase of artificial intelligence growth. Rather than relying solely on in-house development, Nvidia is turning to specialized partners to strengthen its position in one of AI’s fastest-growing segments: inference.

Inference is the process of running trained AI models in real-world applications such as chatbots, recommendation systems, image recognition, and enterprise AI tools. While Nvidia has long dominated AI training with its powerful GPUs, inference presents a different set of challenges. Low latency, predictable response times, and energy efficiency matter more than raw computing muscle, especially as AI moves from data centers into everyday products.

Groq has built its reputation around high-speed, deterministic inference chips designed to deliver consistent performance with minimal delays. Unlike general-purpose GPUs, which juggle many tasks at once and can therefore vary in response time, Groq’s architecture focuses on executing AI workloads in a highly predictable manner. This makes it particularly attractive for real-time applications where responsiveness is critical.

By licensing Groq’s technology, Nvidia gains access to specialized expertise without committing to a full acquisition or lengthy internal development cycle. The move suggests Nvidia recognizes that AI workloads are diversifying — and that no single architecture is optimal for every task. Instead of viewing startups as direct competitors, Nvidia is selectively integrating external innovations into its broader ecosystem.

This strategy also reflects the intense competition in the AI hardware space. Rivals are racing to offer alternatives that promise lower costs, faster inference, or better energy efficiency. Nvidia’s willingness to collaborate shows a pragmatic approach: protecting its dominant position while adapting to emerging use cases that could redefine AI deployment.

For Groq, the licensing deal is a significant validation. Partnering with Nvidia elevates its technology’s credibility and expands its reach without requiring massive manufacturing scale. It also highlights how startups can influence industry giants by excelling in narrow, high-impact niches.

The agreement underscores a broader trend in the AI industry: inference is becoming just as important as training. As companies deploy AI at scale, efficiency and performance consistency matter more than ever. Hardware strategies must evolve accordingly.

Ultimately, Nvidia’s licensing deal with Groq illustrates how AI leadership today depends not only on size and dominance, but also on flexibility. By blending its own strengths with external innovation, Nvidia is positioning itself for an AI future that is faster, more efficient, and far more varied than the one that came before.